Build High-Performing Application Platforms, Expertly Crafted.

I simplify Kubernetes and create self-service Cloud Native platforms for developers.

See My Services

Solutions

Find solutions to common problems.

Training

Learn to apply technology and practices.

Consulting

Let's work together.

Cloud Native Technology Applied

Addressing software delivery and platform management challenges with the most innovative solutions.

View Solutions

Expertise. Passion. Empathy.

I'm an Independent Consultant & Platform Architect.

The technology is already here. All that remains is to apply it appropriately, taking into account the context, constraints and goals of the organization. I can help you with that.

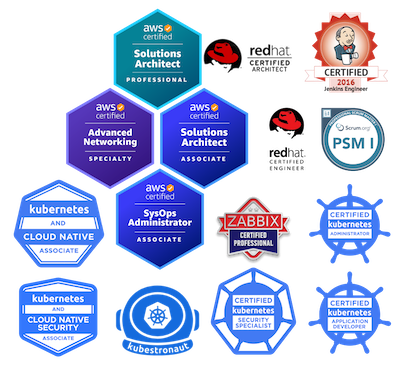

Proven Skills

I bring a natural passion for learning and problem-solving to every project. That same drive has led me to earn many industry-recognized certifications.

Validated Technology

I specialise in DevOps and Platform Engineering. I use Kubernetes and other Cloud Native technologies to help my clients build reliable application platforms that are managed entirely as code (GitOps/Everything as Code).

See My Services

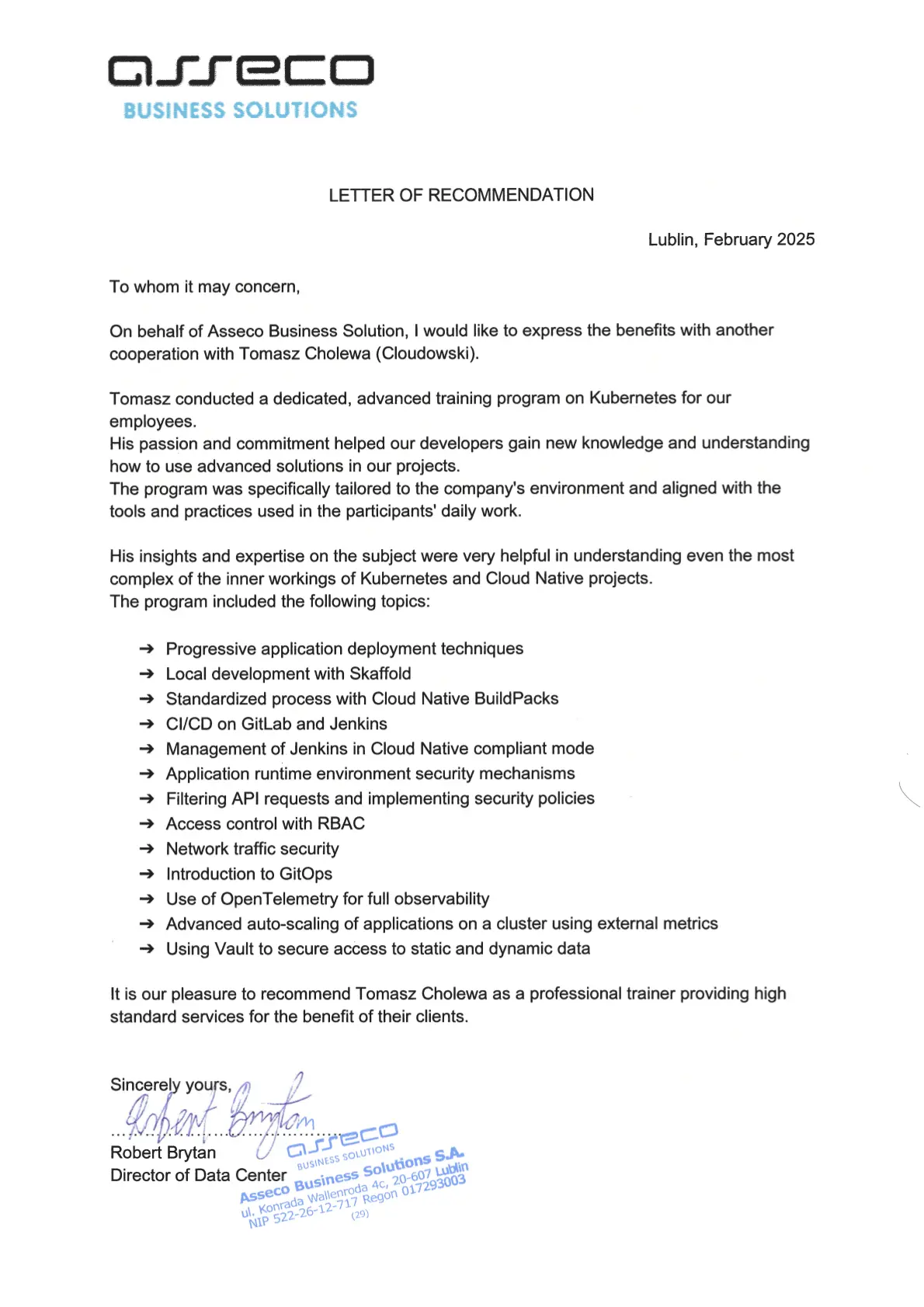

What My Clients Said

Why Work With Me?