Conversations about security don't have to be boring, especially with interesting guests such as Andrzej Dyjak. We sat down with Andrzej and talked about interesting topics in the security of modern application platforms. Listen in and find out, among other things: Why is security often not a priority in smaller companies? What was behind one of the most audacious attacks of recent […]

How to build CI/CD pipelines on Kubernetes

Kubernetes as a standard development platform. We started with single, often powerful, machines that hosted many applications. Soon after came virtualization, which changed little from a development perspective but a great deal for operations. So developers became frustrated, and that’s when the public cloud emerged to satisfy their needs instead […]

Jenkins on OpenShift – how to use and customize it in a cloud-native way

I can’t imagine a deployment process for any modern application that isn’t orchestrated by some kind of pipeline. It’s also the reason I got into containers and Kubernetes/OpenShift in the first place: they force changes in how you approach building and deploying, but they make up for it with all these nice features that […]

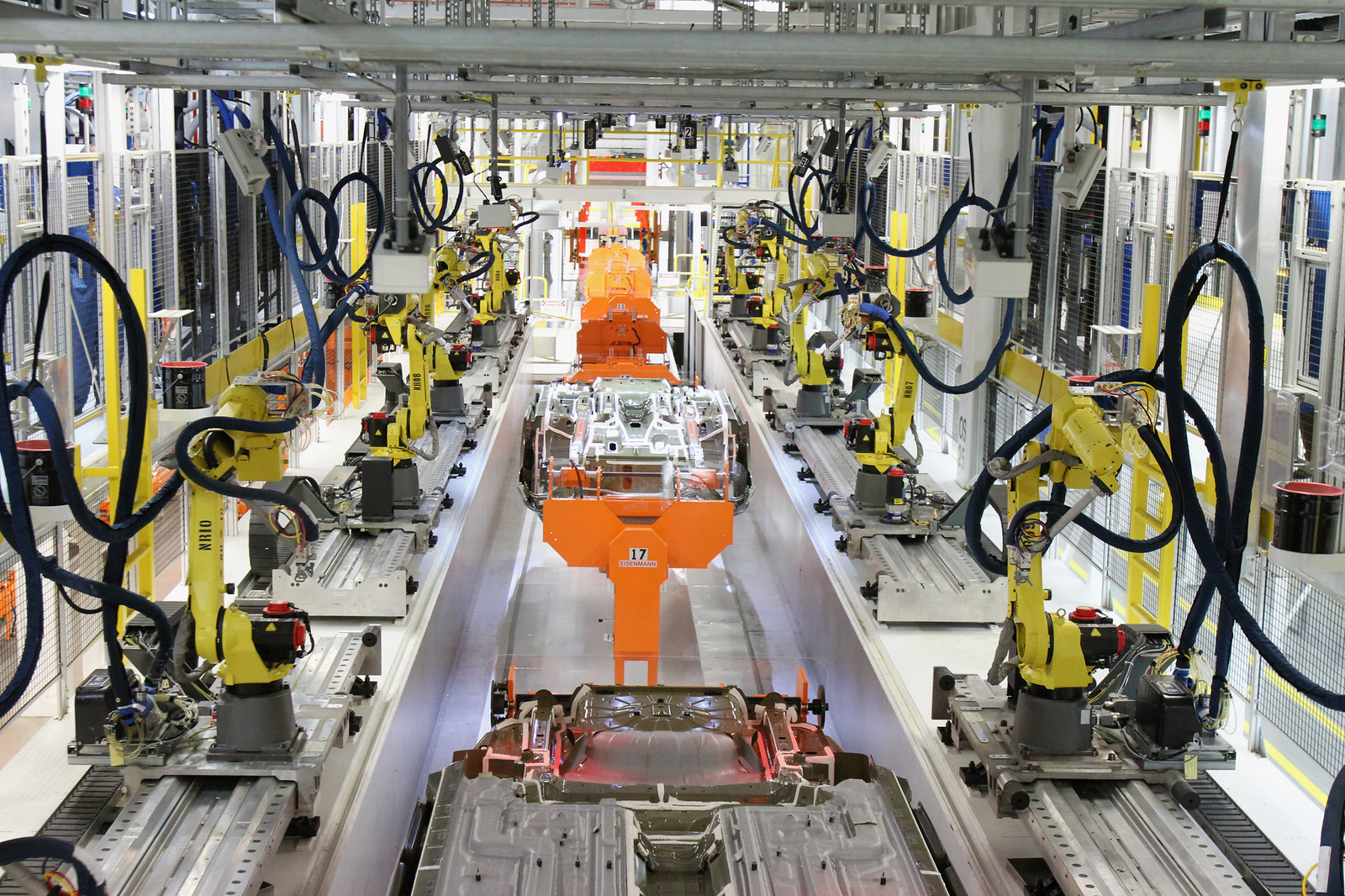

Maintaining big Kubernetes environments with factories

People are fascinated by containers, Kubernetes, and the cloud-native approach for different reasons. It could be enhanced security, real portability, greater extensibility, or more resilience. For me personally, and for organizations delivering software products to their customers, one reason is far more important: the speed they can gain. That leads […]