DevOps is a huge area – a mix of technology and culture. So what does it take to become a DevOps engineer, and which skills do you need?

In the following parts, I’m going to show you a clear path for DevOps engineers, architects, and experts.

I have divided the article into 3 parts:

- In the first one, I will focus on the tools for DevOps engineers.

- In the second part, I will talk about skills for DevOps architects.

- And in the last one, I will reveal what is required to become a DevOps expert.

Engineer skills

Let’s start with engineering skills. Here are 5 technical skills that are essential for DevOps engineers, from junior to more senior positions.

1 – Kubernetes

Of course, Kubernetes is number one. Not only because it’s my favorite tool, but mostly because it’s the technology that has changed the IT industry over the past few years.

It’s almost everywhere and has been adopted by most major companies. It’s ubiquitous because it can be used in the cloud as Kubernetes-as-a-service or in on-prem environments on regular servers.

Kubernetes combines knowledge from multiple areas. So it’s not a single skill but a group of many skills.

To know Kubernetes well, you need to learn Linux – after all, Kubernetes can be viewed as just a bunch of Linux servers visible and managed via an API, that’s it!

You must know how Linux works, how to set it up on a server, and how to use the tools included in every distribution (e.g. sed, find, awk, top, ps, kill, etc.).

With Linux comes another technology related to Kubernetes – it’s containers. So you ought to know what a container is and how Docker or any other container engine works.

On top of that, a DevOps engineer needs to understand Linux networking to be able to set up clusters and load balancers, control traffic, and use service meshes.

Before Kubernetes was born, Linux and the cloud were the primary technologies used in DevOps. Now the focus has shifted to Linux-based containers.

So why learn Kubernetes in the first place?

If you know how to use it, it’s simply easier and faster to set up scalable environments that respond to the changing utilization of your apps.
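
To make “responsive to changing utilization” concrete, the Kubernetes Horizontal Pod Autoscaler documents a core scaling rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below models only that rule, plus a tolerance band (0.1 here) and min/max bounds. It ignores stabilization windows, pod readiness, and missing metrics. The function name and defaults are my own assumptions, not Kubernetes code.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within the tolerance band and clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: don't flap
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6 pods
print(desired_replicas(4, 90, 60))  # 6
# Load drops to 15%: ceil(4 * 0.25) = 1 pod
print(desired_replicas(4, 15, 60))  # 1
```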

It’s also easier to secure workloads, because Kubernetes gives you a lot of additional tools (e.g. ones based on eBPF technology) and because containers are faster to update.

With Kubernetes, you will be able to create even more complex multi-cloud environments and, what is even more important, build hybrid solutions a lot more easily. The latter scenario has become really popular recently.

But do you know what the REAL purpose of Kubernetes is and why it has become so popular? It’s the speed of deployments. And by speed, I mean the whole process of getting code from a git repository to the production environment.

That’s why developers love Kubernetes and that’s why it’s your job to ensure effortless use of it within your organization.

People working as DevOps engineers or architects are expected to have expert knowledge of Kubernetes – others (e.g. developers) will turn to them for help on how to use its features efficiently.

I just can’t imagine modern DevOps without Kubernetes. Period.

2 – Cloud

Now here comes the second technology that is essential for DevOps. This time it’s a tricky part, because many companies prefer on-prem environments. However, cloud is both a technology provided by specific vendors (e.g. AWS, Azure, or GCP) and a new approach to building IT environments. Some people call it cloud native, and this term includes many technologies, like the previously mentioned Kubernetes.

But getting back to the concept we all know as Cloud. One thing is clear: in fact, all clouds are similar. So all you need to do is get to know at least one cloud provider well enough, and you will be able to use the other ones.

Start by acquiring fundamental knowledge.

You need to know the networking part. Almost all vendors use the concept of a Virtual Private Cloud (VPC, or a similarly named construct) to isolate your workloads and services at the network level. You must know how to manage them, secure traffic with policies (e.g. security groups), and create various private and public zones.
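
A quick way to practice the networking part is to plan the address space. The sketch below uses Python’s standard `ipaddress` module to carve a VPC range into one public and one private /24 subnet per availability zone. The 10.0.0.0/16 range, the zone names, and the public/private layout are illustrative assumptions, not a vendor recommendation.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, zones: list[str], new_prefix: int = 24) -> dict[str, str]:
    """Carve a VPC CIDR into one public and one private subnet per availability zone."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # generator of equal-sized, non-overlapping blocks
    plan = {}
    for zone in zones:
        plan[f"public-{zone}"] = str(next(blocks))
    for zone in zones:
        plan[f"private-{zone}"] = str(next(blocks))
    return plan


for name, cidr in plan_subnets("10.0.0.0/16", ["zone-a", "zone-b"]).items():
    print(f"{name:15} {cidr}")
```

Allocating from one generator guarantees that the subnets never overlap. If the VPC runs out of blocks, `next()` raises `StopIteration`.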

All cloud providers have some kind of Identity and Access Management (IAM for short) to control access to their APIs for users and machines.

And while we’re discussing machines – whatever cloud vendors call them (virtual machines, instances, or compute resources), they are just good old Linux servers. You need to know how to create them, distribute them among availability zones, and prepare (or, as some call it, “bake”) images to enable the use of automatic scaling mechanisms.
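
Distributing machines among availability zones usually means balancing them, so that losing one zone takes out as little capacity as possible. This hypothetical helper shows the idea with simple round-robin placement. It is not any cloud vendor’s API, and managed scaling groups generally do this balancing for you.

```python
from collections import Counter
from itertools import cycle


def spread_instances(count: int, zones: list[str]) -> list[tuple[str, str]]:
    """Place instances round-robin so zone sizes differ by at most one."""
    zone_cycle = cycle(zones)
    return [(f"web-{i}", next(zone_cycle)) for i in range(count)]


placement = spread_instances(5, ["zone-a", "zone-b", "zone-c"])
for instance, zone in placement:
    print(instance, "->", zone)
print(Counter(zone for _, zone in placement))
```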

To make your systems resilient you need to learn how to use proper storage technologies – that includes block storage attached directly to the instances and object storage used by apps and for multi-tier backup solutions.

All of this costs money, sometimes a lot. So one of your responsibilities is to learn how to effectively control the cost of the services. And trust me – it can be tricky at times.
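
Much of cost control is simple arithmetic done consistently. The sketch below estimates a monthly bill from hourly prices using the common 730-hours-per-month approximation. It also shows the effect of running a fleet only during working hours. The instance sizes and prices are made-up placeholders, not real vendor rates.

```python
HOURS_PER_MONTH = 730  # common billing approximation: 24 * 365 / 12

# Hypothetical on-demand prices in USD per hour; real rates vary by vendor and region.
HOURLY_RATE = {"small": 0.02, "medium": 0.08, "large": 0.32}


def monthly_cost(fleet: dict[str, int], hours: float = HOURS_PER_MONTH) -> float:
    """Estimated monthly cost of a fleet given as {instance_size: count}."""
    return sum(HOURLY_RATE[size] * count * hours for size, count in fleet.items())


always_on = monthly_cost({"medium": 4, "large": 2})
# The same fleet running only during working hours (10 h/day, 22 days/month)
office_hours = monthly_cost({"medium": 4, "large": 2}, hours=10 * 22)
print(f"always on:    ${always_on:,.2f}")     # $700.80
print(f"office hours: ${office_hours:,.2f}")  # $211.20
```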

And how do you control your cloud environments? You use the fancy web consoles mostly to get an overview of your environment. For everything else you use the API, and it should be your primary way of interacting with the cloud.

Do you know what funny thing I’ve noticed about the cloud and Kubernetes after working with them for many years?

Learning Kubernetes makes it easier to understand the cloud, and vice versa! You will notice common concepts with subtle differences. For example, in the cloud you use virtual machines as building blocks for services, while in Kubernetes you use containers instead. However, in both cases you still interact with them via an API, set RBAC rules, control traffic, scale workloads, load balance traffic, and set up metrics and logging.

So learn the cloud first as a technology, then as the more universal cloud-native approach.

3 – Terraform

Moving on to another fascinating technology: Terraform. If you haven’t heard of it, you must have been asleep for the last few years or you’re new to DevOps.

It’s one of the best-known projects in the HashiCorp suite, which also includes Consul, Packer, and Vault.

It’s the number one automation tool. It’s mostly used to automate cloud environments, but it’s not limited to that.

It’s more universal: its pluggable architecture enables the use of 3rd party providers that extend it to almost any software with an API (e.g. GitLab, GitHub, Grafana, Zabbix – there are over 2k of them).

In fact, it can replace another project that has been widely used in infrastructure management – Ansible.

I’ve used Ansible a lot, but in my opinion it’s not the fastest or the most convenient piece of software to use (and especially to troubleshoot). It’s also not as fully declarative as Terraform, and I believe that in most cases you can use Terraform with its providers and modules instead.
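
The difference between declarative and imperative tools is easiest to see in a toy model. With a declarative tool, you describe the end state, and the tool compares it with what already exists to work out the actions. This is roughly what `terraform plan` reports. The sketch below covers only that comparison step, under simplifying assumptions: resources are keyed by name and their attributes are compared as plain dicts. Terraform’s real engine also tracks state, dependencies, and provider-specific behavior.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Diff desired vs. actual resources (keyed by name) into create/update/delete actions."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(n for n in desired.keys() & actual.keys() if desired[n] != actual[n]),
        "delete": sorted(actual.keys() - desired.keys()),
    }


desired = {  # what the code says should exist
    "vm-web": {"size": "medium"},
    "vm-db": {"size": "large"},
    "bucket-logs": {"versioning": True},
}
actual = {  # what currently exists
    "vm-web": {"size": "small"},
    "vm-db": {"size": "large"},
    "vm-legacy": {"size": "small"},
}
print(plan(desired, actual))
# {'create': ['bucket-logs'], 'update': ['vm-web'], 'delete': ['vm-legacy']}
```

After the actions are applied, the actual state matches the desired state and the plan becomes empty. Re-running it changes nothing, which is the idempotence that step-by-step scripts have to work hard to achieve.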

Yes, modules. Besides providers, the Terraform community has created a lot of useful modules that reduce the work of automating all the things.

These modules can be especially useful when you don’t know the specifics of a particular cloud API. Terraform interacts with the API directly, so without modules it requires fairly in-depth knowledge of that API.

Terraform is so good at automating cloud infrastructure that Oracle chose it as the primary automation tool for its OCI cloud. In my opinion, this confirms Terraform’s leading position as an automation tool. And as I mentioned, it automates not only cloud environments but also various components installed on top of the automated infrastructure.

Together with another HashiCorp project, Packer, it can be used to automate every bit of your cloud infrastructure. And by cloud, I mean both public and private environments, as Terraform supports on-prem platforms as well: if you have OpenStack or vSphere running on your servers, Terraform will help you automate it in a declarative way.

And when the time comes, you can use your skills to automate the provisioning of the public cloud on any vendor. No more proprietary tools and graphical interfaces – one tool to automate them all.

One of the most important parts of DevOps is automation and Terraform is the solution you must know to build highly automated environments.

4 – CI/CD

Do you know when developers appreciate having good DevOps engineers on their team? When those engineers make their deployment processes seamless and effortless.

You achieve this by creating Continuous Integration and Continuous Deployment (or Delivery) processes.

That’s right – one of the most important tasks for DevOps engineers is to prepare infrastructure AND CI/CD processes so that development teams can focus on… creating the best code they can. You can’t help developers create better code but you can ensure they have everything they need to improve it without spending too much time on infrastructure parts.

With automated deployment processes, it’s faster to run the various steps required before revealing this magnificent piece of software to the end users. These steps include packaging software into artifacts, containers, or VM images and then testing them. The tests ensure the software is free of major bugs, security vulnerabilities, or other potential defects.
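
At its core, a pipeline is an ordered list of stages where any failure stops the release. The sketch below models that gate in plain Python. The stage names are hypothetical placeholders for whatever your CI system actually runs. Jenkins, GitLab CI, and GitHub Actions each express stages in their own configuration format.

```python
from collections.abc import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order and stop at the first failure, so a broken build is never deployed."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break
    return log


# Hypothetical stages; in a real pipeline each would call a build tool, test runner, or scanner.
stages: list[Stage] = [
    ("package", lambda: True),
    ("unit-tests", lambda: True),
    ("image-scan", lambda: False),  # e.g. a critical vulnerability was found
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
# ['package: ok', 'unit-tests: ok', 'image-scan: FAILED']
```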

Trust me – developers don’t want to spend time learning infrastructure details and how all these containers, load balancers, cloud APIs, and virtual machines interact with each other. They just want to craft their awesome code.

And the less human interaction is involved in the process the better. No tickets and no private messages on Slack – they want a delivery platform that just works.

So which software should you learn to create those CI/CD pipelines? It doesn’t really matter! Pick whichever is used in your organization or just seems more suitable for you.

My preferred one is Jenkins, but only because I’ve been using it for many years and I’ve learned to leverage it to build almost any pipeline, even the most complex one. However, it’s not the easiest piece of software.

Maybe in your case GitLab CI or GitHub Actions will be better and, most of all, easier to learn. Maybe you’ll choose Tekton when it’s mature enough, as it’s a project designed with Kubernetes in mind.

5 – Programming language

The last part is not another software project – it’s something that builds it.

To become a good DevOps engineer, you need to know how to connect all the building blocks I’ve mentioned so far.

You need some programming language to create scripts or services (more on that later).

I recommend learning the following three, in this specific order.

I would start with Bash. It’s not a programming language per se, but the most popular shell found in almost any Linux distribution. So whether it’s an on-prem virtual machine, bare-metal server, cloud instance, Kubernetes node, or a container, the Bash shell is there.

You can use it to interact with a system or to create a script. Bash has everything you need for smaller tasks – starting from loops, conditional statements, and even built-in regular expressions.

Shell scripting is also at the heart of Dockerfiles, where RUN instructions execute shell commands to build container images, and of the cloud-init scripts executed at the startup of cloud instances.

With an amazing tool called curl, you can even interact with REST APIs, and by combining it with other available commands, you can automate your processes to a decent level.

However, Bash is far from perfect and can be insufficient for more complex scenarios.

Then comes Python. It’s the most popular language and quite easy to learn too. When Bash and curl are not enough, Python with its simplicity can save you from trouble. It’s very powerful when dealing with data and communicating with services via APIs. Error handling, modules, and tests are things that are hard to implement in pure Bash.
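
As an example of error handling that gets awkward in pure Bash, here is a minimal retry helper with exponential backoff and jitter, a common pattern when calling cloud APIs. `TransientError`, `flaky_api_call`, and the default delays are hypothetical. Real code would map specific exceptions or HTTP status codes (such as 429 or 503) to retries. The `sleep` function is injectable, so the logic can be tested without waiting.

```python
import random
import time
from collections.abc import Callable
from typing import TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error for failures worth retrying (timeouts, HTTP 503, ...)."""


def with_retries(call: Callable[[], T], attempts: int = 5, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `call`, retrying transient failures with exponential backoff and jitter."""
    attempt = 0
    while True:
        try:
            return call()
        except TransientError:
            attempt += 1
            if attempt >= attempts:
                raise  # give up and let the caller decide
            delay = base_delay * 2 ** (attempt - 1)  # 0.5 s, 1 s, 2 s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads out retries


calls = 0


def flaky_api_call() -> str:
    """Stand-in for an API call that fails twice before succeeding."""
    global calls
    calls += 1
    if calls < 3:
        raise TransientError("503 Service Unavailable")
    return "ok"


print(with_retries(flaky_api_call, sleep=lambda s: None))  # ok
```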

Python is a regular programming language and you can write anything you like. With so many available libraries you can even create your own Kubernetes operator. So it’s definitely the first choice for DevOps engineers. But not the only one.

Sometimes you need something more. Maybe you want to write a Kubernetes operator using the native SDKs or create plugins for Terraform or Vault. Then you might want to use Golang.

It’s the language of choice for cloud-native environments. Where speed and size matter, choose Go. It’s often used to create single-binary container images that run on Kubernetes clusters.

Golang is also closer to the OS layer than Python and, although its syntax is more verbose, it’s still quite easy to learn.

Architect skills

So those were the skills required for a DevOps engineer. Now let’s move on to the DevOps architect.

I’ve identified three such skills, all focused on the proper design of platforms. To become a DevOps architect, you need engineering skills and much broader experience in working with modern infrastructure.

While Stack Overflow or even AI systems like ChatGPT may help you solve day-to-day problems as an engineer, they will not make you an architect. You need intuition, which is developed over years of practice and of coming up with ideas for improvement and optimization.

6 – API design

The first skill is API design. Now that you know how all the building blocks work, you need to put that knowledge into software.

I’m not saying that you need to become a software architect, but for an architect, it’s not enough to just know how to use the tools. You need to start automating all the things in a smarter and more unified way. You need to hide the complexity of the inner workings behind a unified API.

In short – engineers use APIs while architects design new ones.

The simplest example is the Kubernetes operator design pattern. Writing a new operator is in fact designing a new API. It’s also not that hard, since Kubernetes is designed to be extensible by providing Custom Resource Definitions (CRDs) and SDKs for developing controllers.
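
An operator is built around a reconcile loop: read the desired state from the custom resource, observe the actual state, and act on the difference, as many times as needed. The sketch below models one pass of that loop in Python. The `WebApp` resource and its pod list are hypothetical stand-ins. A real operator would watch the API server through an SDK, such as controller-runtime or the Operator SDK in Go, or kopf in Python.

```python
from dataclasses import dataclass, field


@dataclass
class WebApp:
    """Hypothetical custom resource: users declare only `replicas`."""
    name: str
    replicas: int                                  # spec: the desired state
    pods: list[str] = field(default_factory=list)  # stand-in for the observed cluster state


def reconcile(app: WebApp) -> str:
    """One pass of a control loop: compare the spec with the observed state and fix the difference."""
    diff = app.replicas - len(app.pods)
    if diff > 0:
        for _ in range(diff):
            app.pods.append(f"{app.name}-{len(app.pods)}")
        return f"created {diff} pod(s)"
    if diff < 0:
        del app.pods[diff:]  # remove the surplus pods from the end
        return f"deleted {-diff} pod(s)"
    return "in sync"


app = WebApp("shop", replicas=3)
print(reconcile(app))  # created 3 pod(s)
print(reconcile(app))  # in sync
app.replicas = 1       # a user edits the custom resource
print(reconcile(app))  # deleted 2 pod(s)
print(app.pods)        # ['shop-0']
```

Each pass only acts on the difference, so calling `reconcile` again after convergence does nothing. This makes the loop safe to re-run after crashes or missed events.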

In that way, you can embed your knowledge in software and provide it as a service. That’s what defines advanced DevOps – automation with self-service using API.

Similarly, you can extend Terraform by writing custom plugins (providers).

Of course, there are scenarios where you will need to write your service from scratch and that’s where your expertise in Python or Golang comes in handy. There are plenty of frameworks that will help you to build your API (e.g. Flask, Gin, and Revel to name a few).

I find this form of automation much more mature. The skill of designing new APIs is required to build complex systems on a much larger scale. Not everything can be done with existing tools and in those scenarios expertise in that area is crucial.

7 – Security

Now let’s talk about an often neglected topic – security. Yes, security has always been a “top” priority, but I think it’s often more about what should be done rather than the sad reality of what is actually being done.

That’s why this important job is a part of DevOps architect responsibilities. And once again – it’s not about the tools but about the real impact on the security of systems working inside an organization.

To keep systems secure you need to work on two areas: learn what technology and practices to use and also how to make sure these practices are in use within the organization. No tool will be good enough if there’s no awareness and cooperation embedded in the culture.

I could have put security in the engineering skills, and indeed all engineers should be able to make their systems secure. But the architect’s role is even more important, because they have to work on the non-technical aspects as well.

And what is the technical part? From a DevOps perspective, it’s mostly about putting security practices into code. In the old days, it was mostly about a myriad of documents describing how to secure the systems; now it’s about embedding those practices in the development, deployment, and maintenance processes.

That covers everything from simple things like the OWASP Top 10, through the well-known principle of least privilege, to multi-factor authentication, auditing, and so on.
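
Least privilege boils down to “deny by default, allow only what is explicitly granted”. The sketch below expresses that rule in a few lines of Python. The roles and “service:action” strings are invented for illustration. Real systems such as cloud IAM or Kubernetes RBAC add explicit denies, conditions, and resource scoping.

```python
from fnmatch import fnmatchcase

# Hypothetical roles mapped to allowed "service:action" patterns.
POLICIES = {
    "ci-deployer": ["registry:push", "cluster:apply"],
    "auditor": ["logs:read", "*:describe"],
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: allow only actions that match a pattern granted to the role."""
    return any(fnmatchcase(action, pattern) for pattern in POLICIES.get(role, []))


print(is_allowed("ci-deployer", "registry:push"))   # True
print(is_allowed("ci-deployer", "cluster:delete"))  # False
print(is_allowed("auditor", "cluster:describe"))    # True
print(is_allowed("intern", "logs:read"))            # False: unknown role
```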

With Kubernetes, it’s also about using available mechanisms like seccomp profiles and SELinux for containers, automated scanning of application artifacts and container images, or enforcing best practices at the API level with OPA or Kyverno.
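
OPA (with Rego policies) and Kyverno (with YAML policies) enforce rules like these when resources are submitted to the Kubernetes API. To stay in one language, the Python function below only mimics the logic of two common rules on a parsed Pod manifest: no privileged containers, and no unpinned or `latest` image tags. It is a simplified sketch, not how either tool is implemented or configured.

```python
def violations(pod: dict) -> list[str]:
    """Check a parsed Pod manifest against two example rules and return any violations."""
    problems = []
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        image = container.get("image", "")
        # Look for a tag only in the last path segment, so a registry port
        # such as "registry.local:5000/app" is not mistaken for a tag.
        last_segment = image.rsplit("/", 1)[-1]
        tag = last_segment.rsplit(":", 1)[1] if ":" in last_segment else ""
        if tag in ("", "latest"):
            problems.append(f"{name}: image '{image}' must use a pinned tag")
        if container.get("securityContext", {}).get("privileged", False):
            problems.append(f"{name}: privileged containers are not allowed")
    return problems


pod = {
    "spec": {
        "containers": [
            {"name": "web", "image": "nginx:1.25"},
            {"name": "debug", "image": "busybox", "securityContext": {"privileged": True}},
        ]
    }
}
for problem in violations(pod):
    print(problem)
```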

I could list dozens of projects that focus on security for all types of environments. But I will repeat once again – it’s not the tools that will secure your systems, but the approach that is a part of the culture.

And I believe it’s a job for DevOps Architect or DevSecOps Architect if you will.

8 – Everything as Code

Alongside security comes another very important practice that is crucial for DevOps architects: keeping everything as code. In other words, moving from operating systems by hand to designing them and putting every piece in software. GitOps has recently emerged as the practice for managing Kubernetes environments, and it certainly follows this approach, but I see it more broadly. I find it mandatory for all parts of DevOps.

This will force you to think in other, more advanced terms.

Scalability is possible only when virtual machine or container images are available and the process of baking them is expressed in code.

Think about repeatability – do you think it’s achievable without coding your infrastructure, CI/CD processes, and aforementioned security?

The ease with which all crucial parts of the platform can be updated just by changing a few lines of code also significantly strengthens its security.

And don’t forget about testing. With everything kept as code, every change can be tested and easily reverted. This requires writing test scenarios but it’s a small effort compared to the benefits it brings.
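
Once infrastructure is described in code, tests can catch bad changes before they are applied. The sketch below checks a hypothetical network definition for subnets that fall outside the VPC or overlap each other (the dict format is an assumption). In practice, such checks could run in the CI pipeline on every commit, for example with pytest.

```python
import ipaddress
from itertools import combinations


def check_network(network: dict) -> list[str]:
    """Return errors for subnets outside the VPC range or overlapping each other."""
    errors = []
    vpc = ipaddress.ip_network(network["vpc"])
    subnets = {name: ipaddress.ip_network(cidr) for name, cidr in network["subnets"].items()}
    for name, net in subnets.items():
        if not net.subnet_of(vpc):
            errors.append(f"{name} ({net}) is outside the VPC {vpc}")
    for (name_a, net_a), (name_b, net_b) in combinations(subnets.items(), 2):
        if net_a.overlaps(net_b):
            errors.append(f"{name_a} overlaps {name_b}")
    return errors


# Hypothetical definition as it might be stored in git.
network = {
    "vpc": "10.0.0.0/16",
    "subnets": {"public-a": "10.0.0.0/24", "private-a": "10.0.2.0/24", "private-b": "10.0.3.0/24"},
}
assert check_network(network) == []

# A careless change: private-b now overlaps private-a.
network["subnets"]["private-b"] = "10.0.2.128/25"
print(check_network(network))  # ['private-a overlaps private-b']
```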

So to achieve all those things and bring your platform management to the next level you need to put everything in a git repository. You will decouple infrastructure management from app development, increase transparency, enable rollbacks and allow for easy experimentation with new features.

Expert skills

There are two skills left that I want to talk about. They are non-technical, or soft skills if you will. I decided to mark them as expert skills to emphasize how important they are and also how rarely they are used.

Does it mean they should be learned after the previous ones? On the contrary – I believe they should be developed as early as possible in your career. You will soon discover (if you haven’t done so already) that tools don’t matter that much and there are things of a lot more importance. They are, however, much harder to master, but will yield incredible results over time.

9 – Active listening

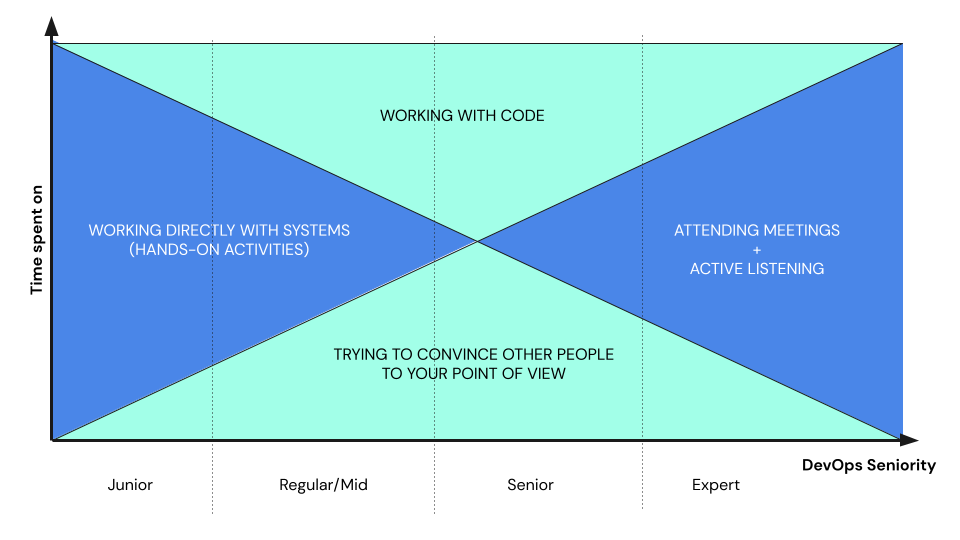

The real reason why these expert skills are so important is visible on the diagram below.

It shows how your career progresses from junior to senior and then to expert.

You start by learning by doing, implementing small solutions, and doing some manual tasks, and then slowly you start to notice that, indeed, working with code is more efficient, and you even master that approach.

Then you attend more and more meetings during which your knowledge, however appreciated, doesn’t matter that much. You need to start listening and listening very carefully.

That seems easy, doesn’t it? Well, not exactly. How many times have you been in a discussion where someone was talking and, in your mind, you were preparing a clever response or doing something else instead of really listening to what the other person was trying to convey?

You must learn to pick up essential elements from lengthy monologues or heated discussions.

During meetings, ask the right, clear questions. Try to understand before you intuitively start to judge and offer solutions.

The practice of effective listening is underappreciated, probably because it requires patience and humility. That’s why it should be the skill you’re developing constantly.

There will be more AI systems like ChatGPT, but nothing, really nothing will ever replace empathetic listening. That’s how you build relationships and find the problems worth solving with your expertise and energy.

10 – Concise presentation

The last skill goes together with the previous one.

To be recognized as an expert, you need to be able to present your ideas as clearly and convincingly as possible.

Like in the career progress diagram – the more senior you become, the more meetings you attend. But what do you do during these meetings? Do you only listen? As an expert in your field, you will be expected to present or actively take part in the discussion.

It’s impossible to be a valuable participant without previously gained knowledge and experience. The “fake it till you make it” approach will be quickly debunked.

What do real experts do? They present complex ideas simply. Knowledge is not enough, but it’s a prerequisite. You use your expertise to inform other people about possible scenarios or to influence their decisions.

And to do it right you cannot dump everything you know on the table – it’s overwhelming and counterproductive.

Concise presentation is a skill that can be and, in my opinion, must be developed to effectively influence and act as a real expert.

This skill is crucial for internal use, and some may use it externally during public presentations. Not every expert enjoys or wants to do public speaking, but people appreciate it when they do. That’s because many of the people presenting their ideas at conferences have less experience, and their talks are often just less interesting.

So how do you get better at concise presentation? First, practice, but also care about what you feed your brain with. It’s the “garbage in, garbage out” effect.

Our brains need to be fed with valuable content to be able to build up the base for something that distinguishes experts – solving problems with intuition rather than easily available knowledge.

Conclusion

That’s it. I hope you now know what is required to become a DevOps engineer who knows the tools, a DevOps architect who is able to design complex environments, or an expert who can help implement DevOps practices across organizations.

Use it to grow your career. The world needs more savvy people to help deliver more innovative solutions.